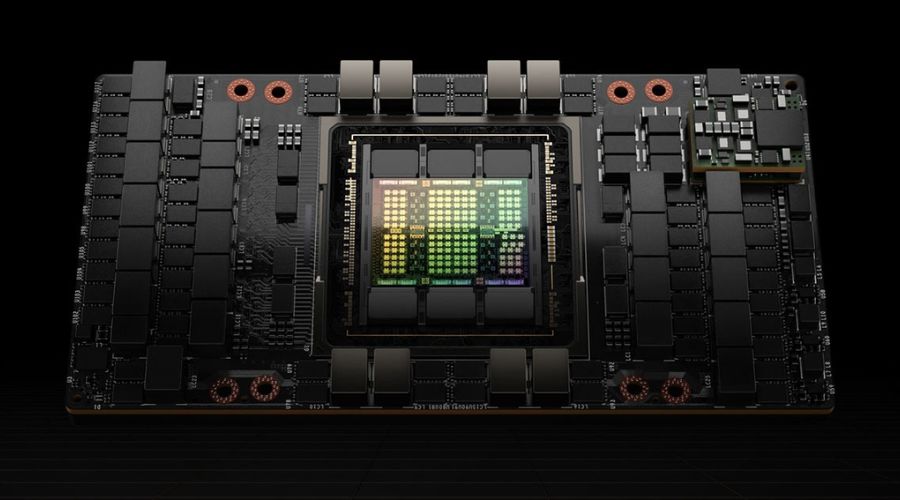

Computer components are rarely expected to revolutionize entire businesses and industries, but one Nvidia Corp. graphics processing unit, the H100, announced in 2022 and shipping widely by 2023, did exactly that. The H100 data center processor has added more than $1 trillion to Nvidia’s valuation, turning the company into an AI kingmaker almost overnight. It has shown investors that the excitement surrounding generative artificial intelligence is converting into real revenue, at least for Nvidia and its key suppliers. Demand for the H100 is so high that some customers have to wait up to six months to get one.

Here are some reasons the Nvidia H100 is the foremost choice:

1. The Secret Behind H100

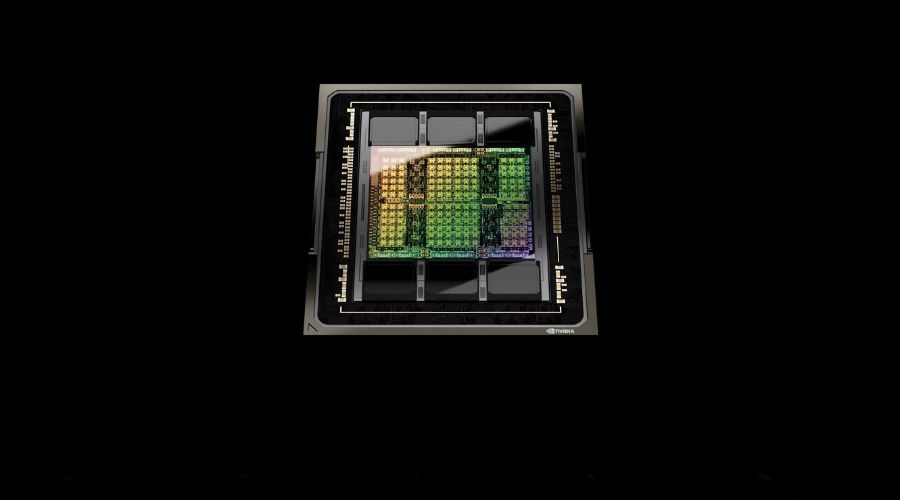

Generative AI platforms learn to do tasks like translating text, summarizing reports, and generating images by training on massive amounts of preexisting data. The more they see, the better they become at tasks such as recognizing human speech and writing job cover letters. They learn by trial and error, making billions of attempts to gain proficiency and consuming massive amounts of computing power in the process. Nvidia claims the H100 is four times faster than its predecessor, the A100, at training these so-called large language models, or LLMs, and 30 times faster at responding to user prompts. For organizations racing to train LLMs to perform new tasks, that performance advantage can be crucial.

2. How did Nvidia crack the AI code?

The Santa Clara, California-based company is the world’s leading manufacturer of graphics chips, the computer components that generate the visuals you see on your screen. The most powerful are equipped with thousands of processor cores that run many concurrent threads of computation, modeling complex effects such as shadows and reflections. Nvidia’s engineers realized in the early 2000s that they could repurpose graphics accelerators for other applications by breaking tasks down into smaller chunks and working on them concurrently. Just over a decade ago, AI researchers recognized that this type of chip would finally let them put their work into practice.
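The idea of breaking a task into smaller chunks and working on them concurrently can be sketched in a few lines of Python. This is only an illustration of the decomposition, not how a GPU or Nvidia's software actually works: the function names (`split_into_chunks`, `brighten`, `brighten_parallel`), the chunk size, and the brightness adjustment are all hypothetical, and Python threads stand in for the far larger number of cores on a real graphics chip.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(values, chunk_size):
    """Cut one big list of work into smaller, independent pieces."""
    return [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]


def brighten(chunk):
    """Hypothetical per-pixel job: raise brightness, capped at 255."""
    return [min(255, pixel + 40) for pixel in chunk]


def brighten_parallel(pixels, chunk_size=4, workers=4):
    """Process every chunk concurrently, then stitch the results back together."""
    chunks = split_into_chunks(pixels, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the input chunks.
        results = list(pool.map(brighten, chunks))
    return [pixel for chunk in results for pixel in chunk]


if __name__ == "__main__":
    image_row = [0, 100, 250, 10, 20, 30, 240, 200, 5]
    print(brighten_parallel(image_row))
```

Each chunk can be handled without knowing anything about the others, which is what makes this kind of work a good fit for hardware with many cores.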

3. What makes Nvidia stand first in the race?

Nvidia has consistently updated its offerings, including software to complement the hardware, at a rate that no other company has been able to match. The company has also developed a number of cluster solutions that allow its customers to acquire H100s in bulk and deploy them quickly. Chips like Intel’s Xeon processors can handle more complex individual tasks, but they have fewer cores and are far slower at working through the massive amounts of data needed to train AI software. Nvidia’s data center division reported an 81% increase in revenue to $22 billion in the fourth quarter of 2023.

4. How Does Nvidia Compete With The Big Shots?

Nvidia’s early work on AI, along with CUDA, the programming platform it created for its graphics chips, gave it an advantage over big manufacturers like AMD and Intel. AMD, the second-largest maker of computer graphics chips, has introduced the MI300X, which has more memory for handling generative AI workloads, although its CEO has said the industry is still at a very “early stage of the AI life cycle”. Even Intel acknowledges that demand for data center graphics chips is growing faster than demand for processor units.

5. When’s The Next Release?


Conclusion

The NVIDIA H100 is a graphics processing unit (GPU) built for artificial intelligence (AI) applications and capable of processing massive volumes of data rapidly. It is among the most powerful GPUs available and is used to train AI models, including large language models, and to run other data-heavy applications. For more tech updates visit www.thepennywize.com.

FAQs

Q1. What exactly is the NVIDIA H100 utilized for?

A: The H100 is a graphics processing unit (GPU) chip made by Nvidia. It is among the most powerful GPU chips on the market and was designed specifically for artificial intelligence (AI) applications, such as training and running large AI models.

Q2. How powerful is NVIDIA’s H100?

A: Although NVIDIA H100 cards draw a lot of power, they are more power-efficient than NVIDIA A100 GPUs. The NVIDIA H100 PCIe variant outperforms the A100, delivering about 8.6 TFLOPS per watt in FP8/FP16 workloads.
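A performance-per-watt figure like the one above is simply peak throughput divided by power draw. The short sketch below shows the arithmetic. The two input numbers are assumptions chosen for illustration (a peak FP8 throughput of roughly 3,026 TFLOPS with sparsity and a 350 W power limit) and do not come from this article, so check Nvidia's datasheet before relying on them. The function name `tflops_per_watt` is likewise hypothetical.

```python
def tflops_per_watt(peak_tflops, power_watts):
    """Efficiency = peak throughput (TFLOPS) divided by power draw (watts)."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return peak_tflops / power_watts


# Assumed, illustrative inputs; not figures taken from this article.
ASSUMED_PEAK_FP8_TFLOPS = 3026.0  # peak FP8 throughput, with sparsity
ASSUMED_POWER_WATTS = 350.0       # board power limit

efficiency = tflops_per_watt(ASSUMED_PEAK_FP8_TFLOPS, ASSUMED_POWER_WATTS)
print(f"{efficiency:.1f} TFLOPS/W")  # prints "8.6 TFLOPS/W"
```

Peak figures like these describe best-case throughput, so real workloads will usually land below them.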

Q3. Why is the NVIDIA H100 so popular?

A: It’s a more powerful version of the kind of chip found in most PCs, the one that gives gamers the most realistic visual experience. However, it has been built to handle large amounts of data and computation at high speed, making it an ideal choice for the compute-intensive work of training AI models.